Have you heard of “crawl budget”? What’s it all about? Although the term sounds a bit highfalutin, the concept is relatively simple. It refers to the number of URLs on your website that Google’s search bots can and want to crawl within a given period.

When it comes to SEO and Google’s algorithms, the crawl budget is usually overlooked, and there are a lot of questions about it! For example, will increasing your crawl budget help your website get more organic traffic?

Below, we’ll explain the concept and look at how crawl budget optimization affects your SEO. So let’s get started.

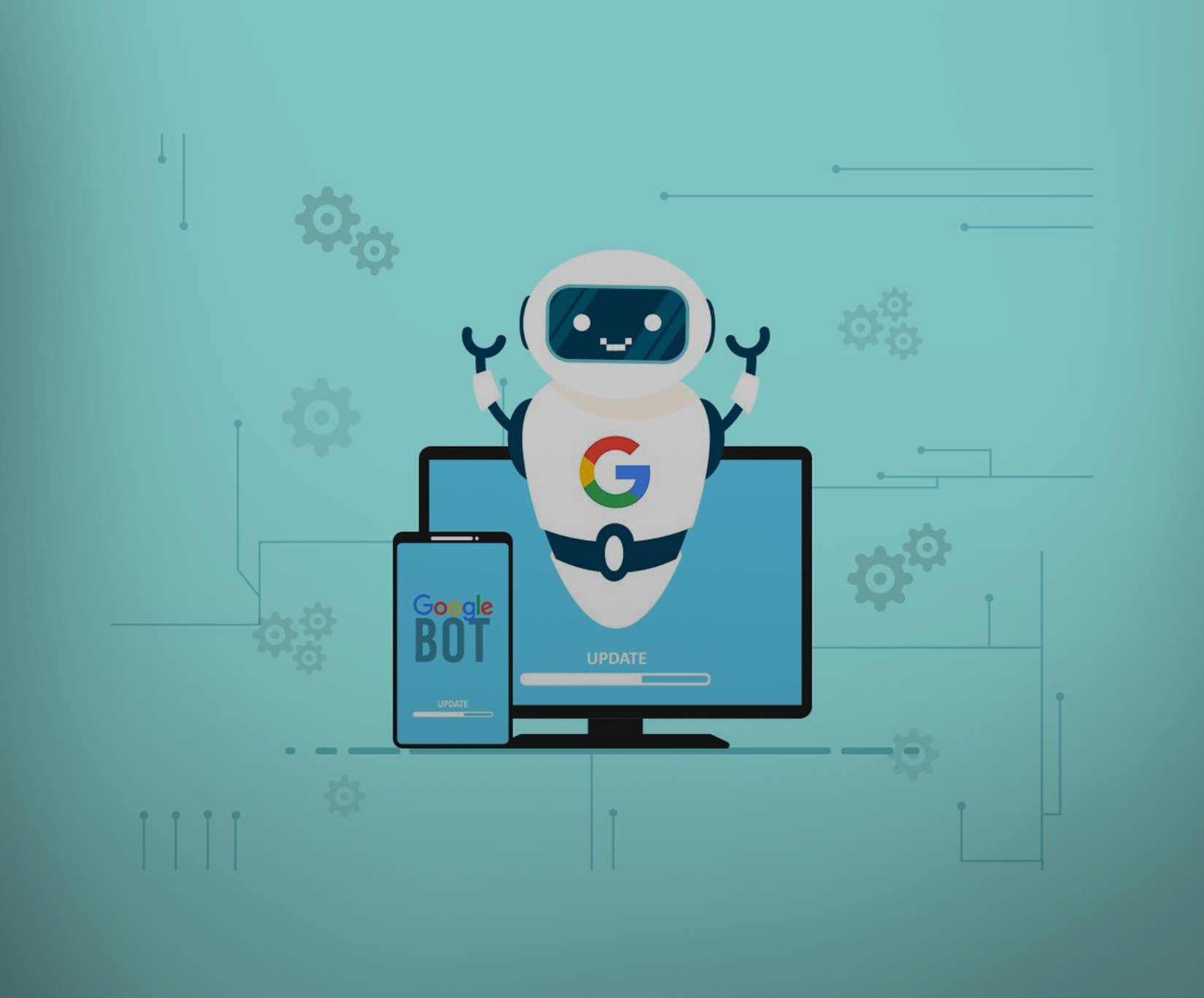

Crawling & Google Bots

Google sends Google bots to your web pages to read your content. But what are Google bots?

“Googlebot” is the general name for Google’s web crawlers, which crawl your website to gather the information Google then uses to index it. There are two main types of Googlebot: a desktop crawler and a mobile (smartphone) crawler.

Reading your website allows Google to evaluate its content and decide how relevant your pages are to the query a user types in.

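It is a common misconception that crawling and indexing are the same. Crawling is when Googlebot fetches a page; indexing is when Google analyzes that page and stores it so it can appear in search results. A page that has been crawled is not guaranteed to be indexed.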

Crawl Budget

The crawl budget is nothing more than the number of pages (URLs) Googlebot can and wants to crawl on your website within a given time. Two factors determine it:

- Crawl rate limit: the maximum number of simultaneous connections Googlebot will use on your site and the delay between fetches, i.e. how fast it can crawl without overloading your server. Google now also calls this the crawl capacity limit.

- Crawl demand: how much Google wants to crawl your site, based mainly on how popular your URLs are and how often their content changes.
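To make the idea concrete, here is a rough back-of-the-envelope sketch. It assumes you know how many URLs your site has and have read an average daily figure for crawl requests from the Crawl Stats report in Google Search Console; the function name `days_to_crawl` and the example figures are hypothetical. Because crawl requests also include images, scripts, and repeat fetches of the same page, treat the result as an approximation only.

```python
def days_to_crawl(total_urls: int, crawl_requests_per_day: float) -> float:
    """Rough number of days Googlebot would need to request every URL once."""
    if crawl_requests_per_day <= 0:
        raise ValueError("crawl_requests_per_day must be positive")
    return total_urls / crawl_requests_per_day

# Hypothetical site: 50,000 URLs, about 2,500 crawl requests per day in Crawl Stats.
print(days_to_crawl(50_000, 2_500))  # 20.0
```

If the estimate runs to weeks and your content changes often, crawl budget is probably worth a closer look.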

Is Crawl Budget Optimization Necessary?

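It depends on numerous factors, perhaps the most important being the size of your website. You don’t have to worry about crawl budget if you have a small business with a modest website. Optimizing your crawl budget becomes important as your site grows, especially if you generate pages and URLs automatically. As a reference point, Google’s own guidance focuses on very large sites (around a million or more unique pages) whose content changes regularly, and on medium-sized sites (around 10,000 or more pages) whose content changes daily.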

7 Tips to Optimize Crawling & Indexing

To optimize your crawling sessions, follow these tips:

- Include XML Sitemaps

An XML sitemap is a file that lists the URLs on your website that you want search engines to crawl and index, optionally with details such as when each page was last modified. It helps Google discover your essential pages, especially new ones or ones that few other pages link to. When working on your sitemap, include only the canonical, indexable pages that matter; you don’t need to show Google every blog post you’ve written.
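As an illustration, the sketch below builds a minimal sitemap with Python’s standard `xml.etree.ElementTree` module. It follows the sitemaps.org format (a `urlset` of `url` entries, each with a `loc` and an optional `lastmod`); the `build_sitemap` helper, the URLs, and the dates are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, lastmod) pairs; lastmod uses YYYY-MM-DD."""
    # The xmlns attribute puts every element in the sitemap namespace.
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters such as & are escaped
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical canonical pages you want Google to crawl.
print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/services/seo/", "2024-04-18"),
]))
```

You would then upload the file to your site and submit it in Google Search Console, or reference it from robots.txt with a `Sitemap:` line.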

- Develop An Internal Linking Strategy

Internal links are hyperlinks that connect the pages of your own website. For example, you can link your blog posts to your landing pages, service pages, product pages, etc. Internal links are important for your SEO strategy because they:

- Help Google discover your pages and understand your site’s structure

- Help your visitors navigate more effectively
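One practical way to check your internal linking is to look for orphan pages, i.e. pages that no other page on your site links to, which crawlers can then only find through a sitemap. The sketch below uses only Python’s standard library and assumes you already have each page’s HTML (for example, from a crawl export); the helper names and example URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.links: set[str] = set()

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            # Resolve relative links and drop #fragments (same page, different spot).
            self.links.add(urljoin(self.page_url, href).split("#")[0])

def internal_links(page_url: str, html: str) -> set[str]:
    """Links on the page that stay on the same host."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    host = urlparse(page_url).netloc
    return {url for url in collector.links if urlparse(url).netloc == host}

def find_orphan_pages(pages: dict[str, str]) -> set[str]:
    """Return URLs (from a URL -> HTML mapping) that no other page links to."""
    linked: set[str] = set()
    for url, html in pages.items():
        linked |= internal_links(url, html) - {url}  # ignore self-links
    return set(pages) - linked

# Hypothetical pages from a small site crawl.
pages = {
    "https://example.com/": '<a href="/services/">Services</a> <a href="https://partner.com/">Partner</a>',
    "https://example.com/services/": '<a href="/">Home</a> <a href="/blog/crawl-budget/#intro">Blog</a>',
    "https://example.com/blog/crawl-budget/": '<a href="/services/">Our services</a>',
    "https://example.com/old-offer/": '<a href="/">Home</a>',
}
print(find_orphan_pages(pages))
```

Any page this reports is a candidate for a link from a relevant blog post, service page, or menu.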

- Remove Unnecessary & Duplicate Content

Publishing duplicate content can hurt two things: your own website and the original content. When Google finds several pages with the same or very similar content, it has to decide which version to treat as the original (canonical) and show to users, and it may not pick the one you want. Ranking signals such as links can end up split between the versions, and every duplicate URL Googlebot fetches uses up crawl budget without adding anything new.
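For exact or near-exact duplicates, a simple check is to normalize each page’s text and compare hashes. This minimal sketch assumes you have already extracted the main text of each page; `find_duplicates` and the URLs are hypothetical, and it will not catch lightly reworded copies, which need fuzzier techniques such as shingling.

```python
import hashlib
import re
from collections import defaultdict

def content_fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and ignoring letter case."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose main text is identical after normalization."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

# Hypothetical pages: a tracking parameter created a second URL for the same content.
pages = {
    "https://example.com/red-shoes": "Red Shoes\n  Free delivery on all orders.",
    "https://example.com/red-shoes?utm_source=mail": "red shoes free delivery on all orders.",
    "https://example.com/blue-shoes": "Blue Shoes\nFree delivery on all orders.",
}
print(find_duplicates(pages))
```

For each group it reports, you can keep one version and point the others at it with a `rel="canonical"` link or a 301 redirect, or remove them.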

- Complement Your Visual Content With Texts

Visual content is often a good substitute for large blocks of text, and it conveys information to your visitors quickly. But search engines rely mainly on text to understand what a page is about.

Add descriptive alt attributes (often called “alt tags”) to your images; this is good SEO practice, and it also makes your website accessible to people with visual disabilities who use screen readers.
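To find images that are missing alt text, you can scan your HTML with Python’s built-in `html.parser`. The sketch below is illustrative, and the class and function names are hypothetical. It flags only `<img>` tags with no `alt` attribute at all, since an empty `alt=""` is the accepted way to mark purely decorative images.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Records the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, and self-closing
        # <img ... /> tags reach this method through handle_startendtag.
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "(no src)")

def images_missing_alt(html: str) -> list[str]:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing

html = """
<img src="/img/logo.png" alt="Company logo">
<img src="/img/team-photo.jpg">
<img src="/img/divider.svg" alt="" />
"""
print(images_missing_alt(html))  # ['/img/team-photo.jpg']
```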

- Avoid Poor Server & Hosting Setup

Poor server and hosting setups can cause your website to slow down or crash frequently. Googlebot adjusts its crawl rate limit to your server’s health: if your site responds quickly, it crawls more; if responses slow down or return server errors (5xx status codes), it crawls less. In other words, crawlers often cannot “read” websites with poor hosting services.
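To see whether server problems are affecting Googlebot, you can check your access logs. The sketch below assumes the common Apache/Nginx “combined” log format and identifies Googlebot by its user-agent string; the regex, helper names, and sample lines are hypothetical. Because user agents can be spoofed, a production check should also verify these requests with a reverse DNS lookup.

```python
import re
from collections import Counter

# Assumed "combined" format: ... "METHOD PATH PROTOCOL" STATUS SIZE "REFERER" "USER-AGENT"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_status_classes(lines) -> Counter:
    """Count Googlebot responses by status class (2xx, 3xx, 4xx, 5xx)."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")[0] + "xx"] += 1
    return counts

def server_error_share(counts: Counter) -> float:
    """Fraction of Googlebot requests that got a 5xx server error."""
    total = sum(counts.values())
    return counts["5xx"] / total if total else 0.0

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/May/2024:10:00:01 +0000] "GET /services/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:04 +0000] "GET /blog/ HTTP/1.1" 503 312 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:09 +0000] "GET /contact/ HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [10/May/2024:10:01:00 +0000] "GET /blog/ HTTP/1.1" 500 312 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
counts = googlebot_status_classes(sample_log)
print(counts, server_error_share(counts))
```

A rising share of 5xx responses to Googlebot is a sign that your hosting is limiting how much of your site gets crawled.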

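- Use Robots.txt to Focus Crawling on Important Pages

A robots.txt file tells crawlers which parts of your site they may crawl and which they may not. To keep Googlebot away from a page or directory, add a Disallow rule for its path; crawlers that follow robots.txt will skip matching URLs. Note that Google does not support a noindex rule in robots.txt. If you want a page kept out of search results, add a noindex robots meta tag (or an X-Robots-Tag HTTP header) to the page itself and leave it crawlable, because Googlebot has to fetch the page to see the tag.

Using your website’s robots.txt file is an effective way to optimize the crawling process: you can block or allow any path on your domain. However, if your website has more than 10,000 pages, you should also use a proper website audit tool.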
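Before publishing robots.txt changes, you can test which URLs your rules block with Python’s standard `urllib.robotparser` module. The rules and URLs below are hypothetical examples. One caveat: Python’s parser applies the first matching rule in file order, while Google applies the most specific (longest) matching rule, so listing the `Disallow` lines before a broad `Allow: /` keeps both interpretations in agreement here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://example.com/services/",
    "https://example.com/search?q=seo",
    "https://example.com/cart/checkout",
):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict}: {url}")
```

Here the service page stays crawlable while the search and cart URLs are blocked, so Googlebot spends its visits on pages you want in search results.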

- Improve Page Load Speeds

Googlebot can read through a website quickly, but slow pages reduce crawl efficacy. The faster your web pages load, the more of them crawlers can fetch in the same amount of time. Fast, reliable responses can also raise your crawl rate limit, since Googlebot crawls more when your server responds quickly.

Final Thoughts

In short, for most websites crawl budget matters far less than other critical factors in your SEO strategy. If your URLs have the essential SEO components, such as high-quality content and an efficient network of internal and external links, you can relax and let Google crawl them. Focus on crawl efficacy over time, and it’ll pay off. Get in touch and let GTECH help you make sure your landing pages get the best search engine results.

For more blogs like this, visit GTECH, one of the best SEO agencies in the UAE.
